Some notes from a spotlight talk for Frome tech shed. (This post is a bit of a work in progress - I’ll be adding links and images shortly.)

A computer-history response to Hollie’s talk:

[Spotlight] Parallel computing - SIMD with shared and distributed memory architectures.

Let’s consider three overlapping ages of machine:

1. the mechanical age
2. the serial age
3. the modern age

We’ll consider arithmetic and storage, and then computation more generally.

For context, we can view computer history as a series of iterations of ‘can we make it work’ and ‘can we make it work faster’ (and ‘cheaper’ and ‘more reliable’). As a consequence, lots of inventions happen earlier than you might expect, and get reused or reinvented later. In the ‘can we make it work’ phase, early on when hardware is expensive, things tend to be done in a pedestrian, which is to say serial, way. In the ‘can we make it faster’ phase, especially later on when hardware is cheaper, things are overlapped or made independent so that they can proceed in parallel.

Part 1: Parallelism in the mechanical age

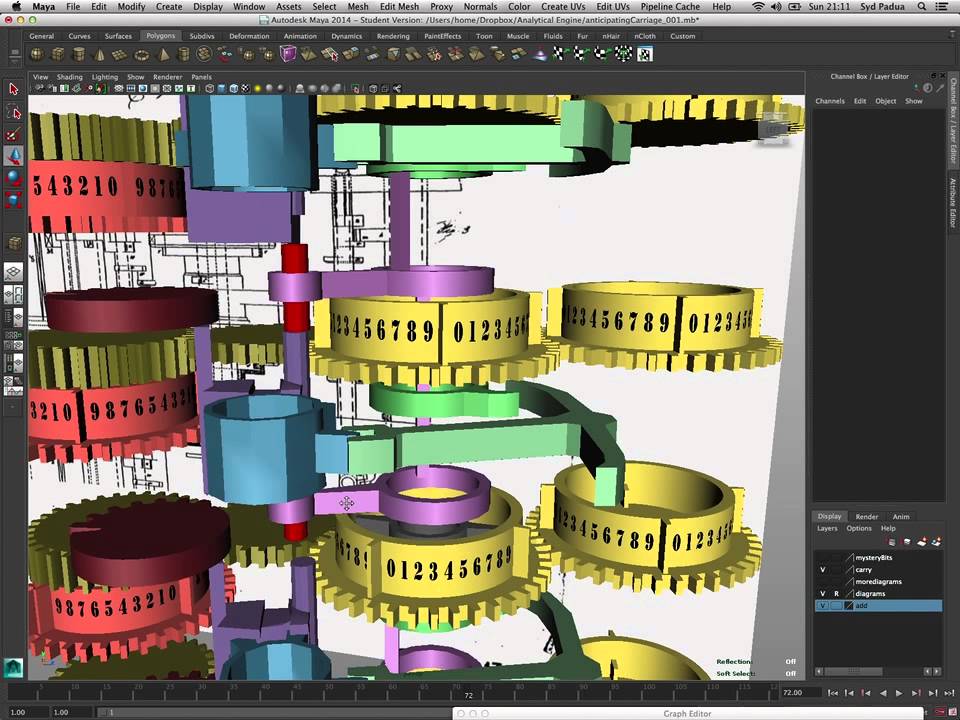

1830s: Babbage’s Anticipating Carriage - never built, but not forgotten. It’s a mechanism to propagate an impending carry across several digits in one movement, rather than one digit at a time, which speeds up addition. Here’s an animated 3D model by Sydney Padua that explains it:

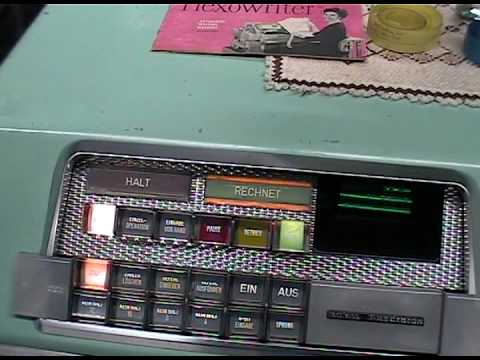
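Babbage’s mechanism worked on decimal wheels, so what follows is only a loose modern analogue in binary: a sketch of carry lookahead, where every carry is worked out directly from ‘generate’ and ‘propagate’ signals instead of waiting for the carry from the digit below to ripple up. The function names and the 8-bit width are illustrative choices, not anything taken from Babbage’s design.

```python
def to_bits(n, width):
    """Little-endian list of bits: element 0 is the least significant bit."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Inverse of to_bits."""
    return sum(b << i for i, b in enumerate(bits))


def lookahead_add(a, b, width=8):
    """Add two unsigned width-bit numbers, computing every carry independently.

    A ripple-carry adder must finish carry i before it can start carry i+1.
    Here carry i depends only on the inputs, so hardware could evaluate all
    of them at once (Python, of course, still loops one after another).
    """
    x, y = to_bits(a, width), to_bits(b, width)
    g = [x[i] & y[i] for i in range(width)]  # digit i generates a carry
    p = [x[i] ^ y[i] for i in range(width)]  # digit i passes an incoming carry on
    carries = []
    for i in range(width):  # carry INTO digit i (there is no carry into digit 0)
        # Some lower digit j generates a carry, and every digit between j and i propagates it.
        c = any(g[j] and all(p[k] for k in range(j + 1, i)) for j in range(i))
        carries.append(int(c))
    return from_bits([p[i] ^ carries[i] for i in range(width)])  # result mod 2**width


print(lookahead_add(0b0111_1111, 1))  # 128
```

In that example the carry into the top digit comes from digit 0 generating and digits 1 to 6 all propagating, decided in one step rather than seven.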

1940s: Zuse’s Z3 and Z4

Zuse’s machines were first mechanical (his earlier Z1), and then electro-mechanical, using relays. Konrad Zuse was a pioneer who eventually started his own computer company. According to Wikipedia, both the Z3 and the Z4 were serial machines, but perhaps not entirely, judging by comments in his autobiography (The Computer: My Life).

1950s: the multi-Curta machines. The Curta is a handheld mechanical calculator; several were ganged together, with the actuators of all of them rotated at once, to run vector calculations. (I think I might have seen a doubled-up desktop mechanical calculator too.)
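In the vocabulary of Hollie’s talk, that is SIMD: one instruction (a turn of the shared actuator) applied to several sets of data at once. Here is a toy model; the `Calculator` class is a hypothetical stand-in for one Curta and only models adding its setting into its result register.

```python
from dataclasses import dataclass


@dataclass
class Calculator:
    """Hypothetical stand-in for one Curta: a setting and a result register."""
    setting: int = 0
    result: int = 0

    def crank(self):
        # One turn of the crank adds the setting into the result register.
        self.result += self.setting


def crank_all(calcs, turns=1):
    """One shared actuator turns every crank together: same operation, different data."""
    for _ in range(turns):
        for calc in calcs:
            calc.crank()


calcs = [Calculator(setting=s) for s in (3, 5, 7)]
crank_all(calcs, turns=4)  # multiply the whole vector (3, 5, 7) by 4
print([c.result for c in calcs])  # [12, 20, 28]
```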

Part 2: Parallelism in the serial age

Serial storage came first, and for a while was the only storage: storage was a difficult practical problem. We might wonder why RAM is called random access, and it’s because there’s no constraint on which addresses can be accessed in sequence. With serial storage, you have to wait for the location of interest to pass by, so the placement of data affects the latency and therefore the performance.
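A minimal sketch of that effect, assuming a hypothetical circulating store of 32 words that passes the read point one word per word-time, with each read taking one word-time. Reading words in the order they come round costs nothing extra; reading them in reverse order costs nearly a full circulation each time.

```python
def wait_time(now_at_head, target, track_length):
    """Word-times until `target` reaches the read point, given that word
    `now_at_head` is there now. The store only ever moves one way."""
    return (target - now_at_head) % track_length


def total_wait(addresses, track_length, start=0):
    """Total waiting for a sequence of one-word reads."""
    pos, total = start, 0
    for addr in addresses:
        total += wait_time(pos, addr, track_length)
        pos = (addr + 1) % track_length  # reading takes one word-time
    return total


print(total_wait([0, 1, 2, 3], 32))  # 0: each word arrives just in time
print(total_wait([0, 31, 30], 32))   # 60: almost two full circulations wasted
```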

Turing suggested gin could be used for computer memory, although mercury was the eventual winner. Piezoelectric crystals send sound waves down a tube of mercury, and at the far end the pulse train is picked up, reconstituted, and fed back in at the start, so the data keeps circulating. EDSAC, coffin, mercury

1940s Alan Turing, a clear writer and communicator:

“The full storage capacity of the ACE available on Mercury delay lines will be about 200,000 binary digits. This is probably comparable with the memory capacity of a minnow.”

From a Lecture to the London Mathematical Society on 20 February 1947 (14 page PDF, well worth a read!)

Nickel wire delay lines, as used in the Elliott machines.

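1950s: the drum machines, and the story of Mel. Instruction sets had a goto in every instruction (the address of the next instruction), to allow for “optimal programming”: placing each instruction where it will arrive under the read head just as the previous one finishes. This assumes programmers are cheap compared to run time.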

1956: the Librascope LGP-30 and the Bendix G-15.

The drum spins at up to 3600 rpm, and its rotation sets the tempo for every part of the machine.

Note that the front panel display is literally a small oscilloscope: because the machine is bit-serial, tracing a signal over one word-time is the same thing as seeing all the bits in a word.
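Some rough arithmetic for the 3600 rpm drum, plus a sketch of what “optimal programming” buys. The 64 words per track and the execution time of 3 word-times are assumptions for illustration, not the specification of any particular machine, and the function names are hypothetical.

```python
RPM = 3600      # drum speed from the text
SECTORS = 64    # assumed: words per track, chosen for illustration

rev_ms = 60_000 / RPM        # one revolution: about 16.7 ms
word_ms = rev_ms / SECTORS   # one word passing under the head: about 0.26 ms
avg_wait_ms = rev_ms / 2     # average wait for a word placed at random: about 8.3 ms


def best_next_sector(sector, exec_words, sectors=SECTORS):
    """Where to put the next instruction so it arrives under the head just as
    the current one finishes: one word-time to read the current instruction,
    then exec_words word-times to execute it."""
    return (sector + 1 + exec_words) % sectors


def wait_words(ready_at, placed_at, sectors=SECTORS):
    """Word-times lost waiting if the next instruction was put at placed_at."""
    return (placed_at - ready_at) % sectors


ready = best_next_sector(10, exec_words=3)  # sector 14
print(wait_words(ready, 14))  # 0: optimally placed
print(wait_words(ready, 11))  # 61: the "next address" placement, nearly a full turn
```

This is why it helps for each instruction to carry the address of its successor: the programmer can put the next instruction at sector 14 rather than sector 11.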

The Manchester Baby (and then the Manchester Mark 1) used cathode ray tube memory: a 2D grid of spots, accessible at random. It’s possible to gang several tubes together to make word-parallel memory, but keeping them all reliable was hard.

1950s: the Booths and their parallel multiplier, which it was thought couldn’t be done. Kathleen Booth was the programmer and Andrew Booth the hardware engineer. Even today, most multipliers inherit from this invention, and multiplication is no longer very much slower than addition.

“the APEX, an all-purpose electronic X-ray calculator, which had significantly higher precision of 32 bits, and a 1-kiloword magnetic drum. Booth claimed that the major accomplishment of the APEX was the implementation of a non-restoring binary multiplication circuit which von Neumann had claimed to be impossible”
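For the curious, here is the standard textbook form of Booth’s algorithm, radix-2 Booth recoding, as a sketch. It is not a reconstruction of the APEX hardware. It rewrites a two’s-complement multiplier as digits in {-1, 0, +1}, so a run of 1s costs one subtraction and one addition instead of one addition per bit. Modern hardware typically uses a radix-4 (“modified Booth”) variant of the same idea.

```python
def booth_digits(r, bits):
    """Booth-recode a signed `bits`-bit multiplier into digits in {-1, 0, +1}:
    digit_i = r_{i-1} - r_i, with r_{-1} = 0."""
    u = r & ((1 << bits) - 1)      # two's-complement bit pattern of r
    digits, prev = [], 0
    for i in range(bits):
        bit = (u >> i) & 1
        digits.append(prev - bit)  # bit pair (r_i, r_{i-1}): (1,0) -> -1, (0,1) -> +1
        prev = bit
    return digits


def booth_multiply(m, r, bits=8):
    """Multiply by adding or subtracting shifted copies of m, one per nonzero digit."""
    product = 0
    for i, d in enumerate(booth_digits(r, bits)):
        if d:
            product += d * (m << i)  # m << i is m * 2**i, also for negative m in Python
    return product


print(booth_digits(126, 8))    # [0, -1, 0, 0, 0, 0, 0, 1], i.e. 126 = 128 - 2
print(booth_multiply(-3, 126))  # -378
```

The multiplier 126 has six 1 bits, but its recoding has only two nonzero digits, so only two add/subtract steps are needed.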

1960s: the Elliott 803 is bit-serial, but has a wide word that holds two instructions. That can be viewed as the beginning of modern machines which fetch and decode a whole cache line’s worth of instructions at once.

I forgot to mention core memory! It was cheap, reliable, and manufacturable, and it was easy to stack several planes to make a word-addressed memory. That was the end of bit-serial main memory, although disks and communications are still bit-serial.

1960s: DEC’s bit-serial PDP-8/S, a cost reduction (a quarter of the price of the original, but rather slow). A slow computer you can afford - is that better than a fast one which you can’t?

1970s: the Ferranti F100-L, a 16-bit microprocessor with parallel memory but a serial ALU. Chip size was limited, so there were only enough transistors for a serial ALU, but because the ALU was clocked much faster than the external (conventional, parallel) memory, the performance hit wasn’t too large.
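A sketch of what a serial ALU does for addition: one full adder, reused once per clock, with a single carry flip-flop as its only memory between clocks. The 16-bit width matches the F100-L, but everything else is a generic illustration rather than the F100-L’s actual circuit. A 16-bit add takes 16 clocks instead of one, which is why clocking the ALU faster than the memory mattered.

```python
def serial_add(a, b, width=16):
    """Add two unsigned width-bit numbers one bit per clock, least significant bit first."""
    carry, result = 0, 0
    for clock in range(width):
        x = (a >> clock) & 1                 # next bit of each operand arrives
        y = (b >> clock) & 1
        s = x ^ y ^ carry                    # full adder: sum bit
        carry = (x & y) | (carry & (x ^ y))  # full adder: carry, held for the next clock
        result |= s << clock                 # sum bit shifts out into the result
    return result, carry                     # final carry signals overflow


print(serial_add(1234, 4321))  # (5555, 0)
print(serial_add(0xFFFF, 1))   # (0, 1)
```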

Part 3: Parallelism in the modern age

1960: the GE 225 had a two-cabinet auxiliary processor, in effect an FPU.

1960s

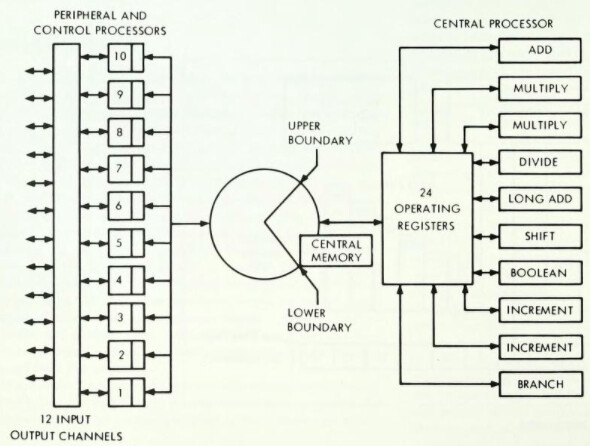

The CDC 6600: see Gordon Bell’s book (with Siewiorek and Newell), Computer Structures: Principles and Examples.

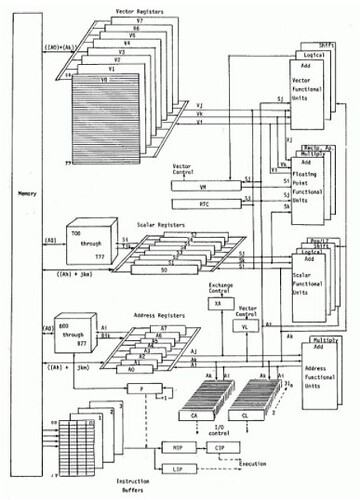

Cray, CDC, and memory interleaving: see here for an example, the 1988 Cray X-MP, or here for the 1976 Cray-1:
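The idea of interleaving, as a sketch: consecutive addresses go to different banks, so while one bank is still busy recovering from an access, the next request can be served by another. The bank count (16) and the busy time (4 clocks) are assumed figures for illustration, not taken from the Cray documentation, and the timing model is deliberately crude. A stride that keeps hitting the same bank loses all the benefit.

```python
def bank_of(addr, banks):
    """Low-order interleaving: consecutive word addresses go to consecutive banks."""
    return addr % banks


def cycles_for_stream(addresses, banks, busy=4):
    """Crude timing model: the processor issues one request per clock, in order;
    a bank stays busy for `busy` clocks after accepting a request, and a request
    to a busy bank stalls until that bank is free."""
    free_at = [0] * banks
    clock = 0
    for addr in addresses:
        b = bank_of(addr, banks)
        clock = max(clock, free_at[b])  # stall if this bank is still busy
        free_at[b] = clock + busy
        clock += 1                      # the next request can issue one clock later
    return clock


print(cycles_for_stream(range(64), banks=16))              # 64: stride 1, no stalls
print(cycles_for_stream(range(0, 64 * 16, 16), banks=16))  # 253: stride 16, every access hits one bank
```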